AI Surveillance Technology: Going Too Far for Public Safety?

Artificial intelligence (AI) has evolved from a concept of science fiction to a cornerstone of modern life. AI surveillance technology—once confined to the pages of dystopian novels—has reshaped public safety protocols.

Real-time threat detection, faster incident response, and reduced crime rates are just a few advantages. But while the benefits are clear, the drawbacks of AI-powered mass surveillance raise some critical questions. Issues around data privacy, social justice, and potential misuse abound.

As machine learning (ML) and generative AI advance at breakneck speed, governments—often risk-averse and slow-moving—face mounting pressure. Are they doing enough to address the ethical and regulatory challenges posed by AI surveillance technology?

In 2016, a year-long investigation by the Georgetown Law Center on Privacy and Technology highlighted some alarming realities. It revealed that nearly half of all American adults have their images stored in law enforcement facial recognition networks.

So, where do we draw the line?

Does public safety trump personal liberty? Is AI surveillance technology an essential advancement for societal progress? Or is it a perilous path toward Orwellian overreach?

This article intends to answer these questions. Read on to learn more about:

- The evolution of AI-powered video surveillance for public safety.

- The technology behind these security systems.

- The real-world benefits of AI surveillance technology.

- AI privacy concerns and ethical considerations.

- The current legal framework and AI regulatory controls.

The Rise of AI-Powered Surveillance in Public Spaces

Closed-circuit television (CCTV) cameras have been around since the World War II era. But it wasn’t until 1968 that they entered the public sphere. Olean, New York, was the first US city to implement CCTV surveillance for civilian purposes.

Some four decades later, the Defense Advanced Research Projects Agency (DARPA) launched ARGUS-IS, an AI-powered imaging system. Initially confined to military use, “the world’s most advanced video imaging device” marked a significant leap forward. It laid the foundation for modern surveillance technology.

Fast-forward to the present day.

Machine learning security and surveillance systems have expanded far beyond military use, penetrating civilian life at an unprecedented scale. Here are some examples illustrating how AI-powered video technology has been implemented across the globe:

- United States

AI surveillance technology includes gun detection, crowd detection, gunshot detection, and facial recognition. Authorities also use biometric technology and predictive policing tools.

- China

With security systems like Skynet’s 200+ million cameras, China leads in AI surveillance technology—and social control.

- Europe

London’s Live Facial Recognition (LFR) demonstrates growing AI use for public safety. INTERPOL also uses facial recognition technology (FRT) for international crime tracking.

- India

SAFE-CITY projects are designed to monitor public spaces to reduce violence against women. AI technology integrated with the Aadhaar biometric system also aids in identity verification and fraud prevention.

- Middle East

The UAE’s Falcon Eye system monitors Abu Dhabi’s urban activities. Saudi Arabia also uses AI for smart border surveillance with facial recognition and object detection.

The rapid adoption of artificial intelligence for video surveillance shows no signs of slowing down. But what innovations make this kind of AI technology possible?

The Technology Behind AI-Powered Surveillance

Thanks to modern AI, today’s video surveillance systems have become powerful tools. They offer a vast range of clear-cut benefits, including real-time insights, enhanced security, and increased efficiency.

Yet, for many, these technologies may seem like something out of a sci-fi movie.

A 2023 Pew Research Center survey found that 42% of Americans had never heard of ChatGPT. Meanwhile, only 33% felt they had a comprehensive understanding of artificial intelligence. These statistics highlight the gap between AI’s rapid integration into daily life and public awareness of its capabilities.

That’s why a deeper understanding of AI surveillance technology is necessary. How else can the public truly grasp the benefits, risks, and implications?

The Building Blocks of AI Surveillance Technology: How Advanced Systems Work

Generally speaking, AI-powered surveillance systems rely on a sophisticated fusion of technologies. These include:

- Artificial Neural Networks (ANNs)

ANNs mimic brain function to analyze patterns for tasks like anomaly detection and facial recognition. TechTarget’s two-minute video offers a simple explanation.

- Generative Adversarial Networks (GANs)

GANs use two competing networks to create realistic synthetic data. In AI surveillance technology, GANs can augment training datasets, which helps improve model performance. Computer scientist Jeff Heaton explores GANs further in his video on the topic.

- Computer Vision

Computer vision analyzes and interprets visual or video data for tasks like object detection, scene understanding, feature extraction, and image recognition. Surveillance solutions use it to detect anomalies or weapons, for example. Professor Shree Nayar of Columbia University offers a quick explainer video.

- Machine Learning

ML enables AI surveillance technology to continuously learn and improve. It also supports predictive analytics and behavior forecasting. Jeff Crume of IBM Technology maps this concept out nicely in his short video.

- Deep Learning

Deep learning algorithms process complex data with multi-layered neural networks. They power high-accuracy tasks like image recognition in video analytics. Google’s Jeff Su explains more in his AI crash course.

- Natural Language Processing (NLP)

NLP analyzes text and speech to detect audio anomalies. One use case is identifying keywords in public conversations. Martin Keen, a Master Inventor at IBM, defines NLP in his video.

- Edge Computing

Edge computing processes data on or near the devices that collect it, reducing latency for real-time analysis. For instance, smart cameras that analyze live video streams use this kind of AI surveillance technology. Accenture simplifies the concept in its short video.

- Cloud Computing

Cloud computing enables scalable storage, real-time processing, and remote management of surveillance systems. It also facilitates the integration of legacy hardware with AI technologies. Amazon offers a simple explainer.

- Biometric Technology

Biometric systems identify individuals using traits like fingerprints or facial features. Most organizations use them for access control and public identification. TechTarget has its own take on this technology.

- Thermal Imaging

Thermal imaging technology detects heat, revealing people, vehicles, or objects, even in smoke or fog. Heat mapping takes things a step further by highlighting activity hotspots or unusual temperature spikes. SciShow’s three-minute video has more.

- Data Analytics

Data analytics enable machine learning security and surveillance systems to process large amounts of data. This, in turn, supports predictive surveillance and real-time analysis. For instance, remote guarding solutions rely on AI video analytics for live threat detection. IBM’s Master Inventor Martin Keen explains data analytics in more detail in his video.
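As a rough illustration of how heat mapping and data analytics can turn raw detections into something an operator can act on, here is a minimal Python sketch. It assumes a hypothetical list of (x, y) detection coordinates from a single camera and bins them into a coarse grid. The function names `build_heatmap` and `hotspots`, the grid size, and the threshold are illustrative assumptions, not part of any specific product.

```python
from collections import Counter

def build_heatmap(points, cell_size):
    """Count detections per grid cell; points are (x, y) pixel coordinates."""
    heatmap = Counter()
    for x, y in points:
        heatmap[(x // cell_size, y // cell_size)] += 1
    return heatmap

def hotspots(heatmap, min_count):
    """Return cells whose detection count meets the threshold, busiest first."""
    cells = [(cell, n) for cell, n in heatmap.items() if n >= min_count]
    return sorted(cells, key=lambda item: (-item[1], item[0]))

# Hypothetical detections (e.g. centers of bounding boxes over a few minutes).
detections = [(12, 15), (18, 11), (14, 19), (95, 40), (13, 12), (160, 170)]
grid = build_heatmap(detections, cell_size=20)
print(hotspots(grid, min_count=3))
```

With this made-up input, only the top-left grid cell collects enough detections to count as a hotspot. A real system would work on far larger volumes and would likely weight detections by time.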

These components power different AI surveillance technologies. Since they’re always evolving, so too are the systems that rely on them. But how are security personnel and law enforcement actually using such systems?

8 Common Types of AI Surveillance Technology

From threat detection to crime prediction tools, AI-driven surveillance has transformed public safety. Here are some key technologies that power these innovative systems:

| Technology | Description | Applications | Examples |
|---|---|---|---|
| Object Detection and Recognition | Identifies specific items like weapons or unattended bags to improve situational awareness. | Improving security in public spaces such as airports, train stations, and large public venues. | Detects firearms in live video feeds to prevent threats. |
| Facial Recognition Software | Analyzes unique facial features to identify individuals. | Identifying suspects, managing airport security, and tracking individuals in public areas. | U.S. law enforcement database. |
| License Plate Recognition (LPR) | Uses AI security cameras to capture and analyze license plates for law enforcement and traffic management. | Tracking vehicles, enforcing traffic laws, and real-time monitoring of highways. | Automatic Number Plate Recognition (ANPR), widely used in the EU for traffic enforcement. |
| Video Analytics Platforms | Processes live footage to extract actionable insights. Reduces reliance on human security teams. | Monitoring traffic, analyzing crowd density, detecting intruders, crowds, and guns. | Detects potential threats in real time. |
| Behavior Analysis Software | Monitors crowd movements and detects anomalies like unusual or suspicious activities. | Crowd safety, fraud detection, and identifying criminal behavior in public spaces. | Predicts criminal actions by analyzing video footage and patterns. |
| Predictive Surveillance | Combines historical data with real-time inputs to forecast criminal activity. | Crime mapping, resource allocation, and threat prevention. | Identifies high-risk areas for crimes in the U.S. |
| Autonomous Patrol Systems | Patrols environments with robots and vehicles equipped with AI to detect anomalies and alert authorities. | Patrolling urban areas and securing high-risk zones. | Autonomous vehicles monitor city streets. |
| AI Integration with IoT Devices | Integrates AI with IoT devices for centralized monitoring and management across smart cities. | Coordinating traffic, managing public safety, and supporting urban infrastructure. | Smart lampposts with AI security cameras, facial recognition, environmental sensors. |

The Benefits of AI Surveillance for Public Safety

Over the past three decades, US violent crime has seen a remarkable decline. In 1992, there were 1.93 million recorded incidents, compared with 1.2 million in 2022.

This shift is no coincidence.

During the same period, cities worldwide have adopted cloud technology, robotic process automation, and advanced data analytics for public initiatives. That’s according to Deloitte and ThoughtLab. Their 2024 survey suggests that AI technology will go mainstream within the next three years.

Building on this trend, machine learning security and surveillance systems have emerged as key drivers. Could AI be the silent force shaping safer communities, enabling faster responses, and facilitating smarter resource allocation?

Let’s unpack the positive impacts of this ground-breaking technology.

Smarter Crime Prevention and Detection Capabilities

According to the 2019 AI Global Surveillance (AIGS) Index, at least 75 out of 176 countries actively use AI surveillance technology, such as:

- Smart policing

- Facial recognition systems

- Smart city and safe city platforms

Global cities leverage these cutting-edge solutions to address the complex challenges of public safety. For example, real-time crime mapping pinpoints high-risk areas. Facial recognition software aids investigations. Authorities even use crowd management tools to ensure public safety.

Moreover, AI surveillance technologies like gun detection, intruder detection, and loitering detection enable swift responses to potential threats.

For instance, firearm detection technology can recognize the presence of a weapon within seconds. In crowded or chaotic environments, authorities can intervene before an incident escalates.
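One common way for a detection pipeline to avoid alerting on a single noisy frame is to require agreement across several recent frames. The sketch below illustrates that general idea only. The per-frame confidence scores, the `FrameVoteAlerter` class, and its thresholds are hypothetical and do not describe any vendor’s actual implementation.

```python
from collections import deque

class FrameVoteAlerter:
    """Raise an alert only when enough recent frames contain a confident detection."""

    def __init__(self, window=5, min_hits=3, min_confidence=0.8):
        self.recent = deque(maxlen=window)  # rolling hit/miss record per frame
        self.min_hits = min_hits
        self.min_confidence = min_confidence

    def update(self, confidence):
        """Record one frame's detector confidence; return True if an alert should fire."""
        self.recent.append(confidence >= self.min_confidence)
        return sum(self.recent) >= self.min_hits

alerter = FrameVoteAlerter()
scores = [0.2, 0.9, 0.3, 0.85, 0.95, 0.1]  # hypothetical per-frame scores
alerts = [alerter.update(s) for s in scores]
print(alerts)
```

In this toy run, the alert fires only once three of the last five frames score above the confidence threshold. A larger window lowers false alerts but delays the response, which is the trade-off any real deployment has to tune.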

Some cities are also exploring AI’s predictive capabilities to analyze video data for enhanced security outcomes. These technologies can reportedly reduce crime by up to 40%.

Real-Time Response and Emergency Management

AI surveillance systems can also address some critical gaps in emergency response and management. One example that comes to mind? FEMA’s response to a few 2024 disasters.

In the wake of Hurricanes Helene and Milton, FEMA received around 900,000 calls for disaster aid. But the organization failed to answer nearly half (47%) of them.

AI can help bridge these gaps. Systems that instantly detect fires, accidents, or security breaches can trigger alerts and expedite response times. Today’s AI technology can even analyze real-time and historical data that supports predictive modeling to preempt crises.

With a reported ability to cut response times by up to 35%, AI surveillance technology can be an invaluable asset in emergencies.

Case Studies and Real-World Examples of Success

Machine learning security and surveillance systems are true game-changers. Their impact can be felt across industries, organizations, and communities. Here are three such examples:

Brazil: CrimeRadar App

Facing high crime rates, Rio de Janeiro adopted the CrimeRadar platform, developed by the Igarapé Institute and Via Science. This app uses machine learning algorithms and advanced data analytics to monitor crime patterns and offer predictive insights. CrimeRadar has improved public safety, contributing to a 30–40% reduction in crime across the region.

United States: Actuate AI Threat Detection

False alarms are a pervasive issue for public and private organizations. They obscure real threats. Actuate AI’s video analytics software changes that by turning existing cameras into intelligent devices. And the impact is clear:

Global Guardian saw a 57% drop in false alarms per site. Another company, Envera, experienced the lowest false alarm rate in their history. Optimized resource allocation and accurate threat detection are just two benefits.

United Kingdom: Facial Recognition Technology

In 2024, London’s Metropolitan Police expanded its AI security operations. It scanned 771,000 faces in 117 deployments. This led to over 360 arrests for serious crimes. The AI surveillance technology has a reportedly low false positive rate of 1 in 6,000 and strict privacy safeguards.
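To put those figures in perspective, here is a back-of-the-envelope calculation in Python. It assumes the 1-in-6,000 false positive rate applies to each face scanned, which the figures above do not spell out, and it treats “over 360” arrests as exactly 360. The results are rough estimates.

```python
faces_scanned = 771_000
false_positive_one_in = 6_000   # assumption: rate applies per face scanned
arrests = 360                   # "over 360", so treated here as a lower bound

# Expected number of people wrongly flagged across all deployments.
expected_false_alerts = faces_scanned / false_positive_one_in

# Faces scanned for every arrest made (an upper bound, since arrests >= 360).
faces_per_arrest = faces_scanned / arrests

print(f"Expected false alerts: {expected_false_alerts:.1f}")
print(f"Faces scanned per arrest: {faces_per_arrest:.0f}")
```

Under these assumptions, even a low per-scan error rate would still translate into roughly 128 people wrongly flagged, and more than 2,000 faces scanned for each arrest.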

AI Privacy Concerns and Ethical Boundaries

Innovation advances at breakneck speed, and artificial intelligence is no exception. As AI becomes more ingrained in mass surveillance, it presents some profound risks.

As Stephen Hawking aptly cautioned:

“Success in creating effective AI could be the biggest event in the history of our civilization. Or the worst.”

The Risks of Overreach and Mass Surveillance

Despite its rapid adoption, the use of artificial intelligence for video surveillance remains largely unregulated. This presents a double-sided challenge:

AI itself lacks adequate regulatory controls, while surveillance oversight remains equally insufficient. Together, these multi-faceted issues present clear risks:

- Erosion of Personal Privacy

Continuous monitoring undermines democratic principles. For example, Amnesty International found that Danish authorities use AI algorithms and mass surveillance in discriminatory ways—like a social scoring system. This sets a dangerous precedent.

- Limiting Free Speech

The perception of mass surveillance alone can lead to self-censorship. Known as “the chilling effect,” this phenomenon stifles freedom of expression and association.

- Potential for Abuse of Power

Without stringent oversight, authorities can misuse surveillance to suppress political opposition and infringe upon civil liberties. A 2023 article published in the Journal of Human Rights Practice explored this issue. It found that surveillance-induced self-censorship erodes trust and paves the way for “creeping authoritarianism by default.”

The Georgetown Law Center on Privacy and Technology revealed a startling truth in their 2016 report:

Only 1 out of 52 US agencies deploying AI surveillance technology had actually secured legislative approval.

Eight years later, the situation has only worsened.

The Face-Off of Facial Recognition Technology

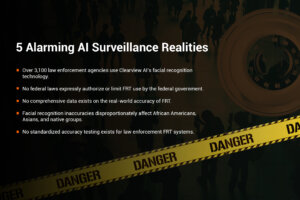

Today, over 3,100 law enforcement agencies use Clearview AI’s facial recognition technology. Yet, no federal laws expressly authorize or limit FRT use by the federal government.

In March 2024, the US Commission on Civil Rights (USCCR) held a public briefing on the use of FRT by three cabinet departments. Officials from the departments of Justice (DOJ), Homeland Security (DHS), and Housing and Urban Development (HUD) all testified.

Clearview AI’s CEO, Hoan Ton-That, also testified. He offered anecdotal evidence where FRT played an instrumental role in criminal cases. Ton-That also submitted that authorities don’t use FRT in real time. Rather, it’s a forensic tool used “after the fact.”

Armando Aguilar, Assistant Chief of the Miami Police Department, echoed this sentiment in his testimony. He also explained how his department uses FRT matches:

“Matches are treated like anonymous tips, which must be corroborated by physical, testimonial, or circumstantial evidence.”

Miami PD is one of the few law enforcement agencies with FRT policies and controls in place. Still, the USCCR’s 194-page report exposed some alarming realities:

- The use of facial recognition technology raises issues under certain laws. Yet, these laws don’t directly regulate FRT.

- No comprehensive data exists on the real-world accuracy of FRT. This is particularly true for low-resolution images.

- Facial recognition inaccuracies disproportionately affect African Americans, Asians, and Native American groups. A 2019 study by the National Institute of Standards and Technology (NIST) corroborates these findings.

- No standardized accuracy testing exists for law enforcement FRT systems. NIST offers tests, but these are voluntary and conducted in laboratories, not real-world environments.

- No legal requirements exist to disclose FRT use to defendants in criminal cases.

- Human reviewers alone aren’t a sufficient safeguard against FRT risks. They tend to exhibit “automation bias,” favoring system-generated matches.

- Personnel often lack the training to reliably identify FRT errors. Homeland Security Investigations (HSI) is the only DHS agency requiring staff to complete FRT training before use.

- HUD promotes FRT through grants to Public Housing Agencies (PHAs). Yet, the department doesn’t regulate or oversee its use.

The USCCR proposed key measures to address these concerns. It recommended that agencies publish FRT use policies, audit compliance, and ensure vendors deliver updates and support.
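One way an audit might surface the demographic disparities described above is to compute the false match rate separately for each group. The Python sketch below is a minimal illustration using synthetic, made-up records. The function name `false_match_rate_by_group`, the group labels, and the record format are assumptions for this example, not a NIST or USCCR procedure.

```python
from collections import defaultdict

def false_match_rate_by_group(trials):
    """trials: iterable of (group, system_said_match, truly_same_person)."""
    impostor_trials = defaultdict(int)  # comparisons of two different people
    false_matches = defaultdict(int)    # impostor comparisons the system accepted
    for group, said_match, same_person in trials:
        if not same_person:
            impostor_trials[group] += 1
            if said_match:
                false_matches[group] += 1
    return {g: false_matches[g] / n for g, n in impostor_trials.items()}

# Synthetic audit records for illustration only; not real measurements.
trials = (
    [("group_a", False, False)] * 98 + [("group_a", True, False)] * 2
    + [("group_b", False, False)] * 90 + [("group_b", True, False)] * 10
    + [("group_a", True, True)] * 50  # genuine matches are ignored by this metric
)
rates = false_match_rate_by_group(trials)
print(rates)
```

In this invented data set, the system wrongly matches group_b five times as often as group_a. A single overall accuracy figure would hide a gap like that, which is why a per-group breakdown is useful.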

AI and Data Privacy, Consent, and Misuse

Coined by Roger Clarke in the 1980s, the term “mass dataveillance” refers to monitoring large groups of people through their digital data. And it’s central to modern AI surveillance technology.

These systems may collect extensive personal data—facial features, behaviors, and locations—often without explicit consent. This issue raises serious AI concerns around data misuse and privacy, including:

- Unauthorized Access and Data Breaches

Surveillance systems are frequent targets of cyberattacks. In November 2024, hackers linked to China intercepted surveillance data intended for US law enforcement.

- Potential for Human Rights Violations

Surveillance technologies are linked to abuses, particularly in authoritarian regimes. That’s why the EU introduced guidelines to limit the export of cyber-surveillance tools that could facilitate internal repression.

- Lack of AI Regulatory Oversight

Unregulated surveillance tools pose significant threats to privacy and human rights. A 2022 United Nations report emphasized the risks posed by spyware and surveillance technologies operating without comprehensive legal frameworks.

- Biometric Data Dangers and Misuse

In her 2019 TED Talk, award-winning journalist Madhumita Murgia warned of the risks associated with biometric data collection.

She argues that, by freely handing over this personal information, we risk exposing ourselves to crime, discrimination, and large-scale misuse.

Ethical AI Dilemmas and Case Studies

The ethical implications of artificial intelligence surveillance are far-reaching. From privacy breaches to legal disputes, these cases reveal the risks of unchecked technology. They also highlight the urgent need for stringent accountability and regulatory controls.

Clearview AI’s Not-So-Clear Legal Controversies

Clearview AI, a US-based company, built a massive biometric database. How? By scraping over forty billion face images from public online platforms. (All without consent from the eight billion of us that make up the world’s total population.)

Despite widespread use, Clearview AI faces a host of legal challenges in the US and abroad. Here are a few examples:

- Illinois

In June 2024, Clearview AI settled a lawsuit alleging privacy violations due to its extensive collection of facial images.

- California

In May 2022, Clearview AI settled another lawsuit with the American Civil Liberties Union (ACLU) in California. It agreed to restrict the sale of its facial recognition database to most businesses and private entities nationwide.

- Vermont

In March 2020, Vermont’s Attorney General also filed a lawsuit against Clearview AI. It pertained to consumer protection and data privacy violations.

In the UK, the court overturned a £7.5 million fine. France, Italy, and Australia ordered Clearview AI to cease data collection, citing GDPR and privacy law breaches. Canada and Sweden also found Clearview in violation of privacy laws in 2021.

The company claims that it lawfully collects publicly available images. Still, this defense raises pressing questions:

- What constitutes “publicly available” data, anyway?

- Should we permit private entities to collect and use public data without consent?

- How can governments ensure that companies don’t misuse or exploit personal data?

- Who regulates such data collection practices?

Graham Reynolds from the International Association of Privacy Professionals explored this issue in more detail. In The “Paradox” of Publicly Available Data, he wrote:

“Therein lies the privacy paradox — laws permit individuals to revoke public access in one sphere, while failing to address continued availability through unregulated third-party channels.

[…]

Moreover, unchecked data collection poses increasing societal risks, including biased AI models based on faulty web scraping, profiling and information manipulation.”

Alarm Bells for Audio Surveillance in France

In 2024, France’s Orléans Administrative Court ruled against AI-powered audio surveillance systems linked to public CCTV. The court found that deploying microphones in public spaces without legal authorization violated fundamental rights. This precedent emphasizes the importance of explicit legal frameworks for machine learning security and surveillance systems.

The ShotSpotter Scandal: When Flawed AI Leads to False Accusations

In 2021, Chicago jailed Michael Williams for nearly a year. Prosecutors primarily based the murder charge on an alert from ShotSpotter, an AI gunshot detection system.

But an Associated Press investigation revealed “a number of serious flaws” in using the ShotSpotter alert as evidence. It found that the AI surveillance system can often mistake non-gunfire sounds for gunshots.

Despite this and the lack of physical evidence or motive, the authorities arrested Williams. The court ultimately dismissed the charges, and Williams filed a federal lawsuit. But how many similar cases fly under the radar?

As The Innocence Project stated: “Although its accuracy has improved over recent years, this technology still relies heavily on vast quantities of information that it is incapable of assessing for reliability. And, in many cases, that information is biased.”

The Problem with Predictive Policing

Predictive policing employs AI algorithms to forecast potential criminal activity. Early adopters of this “Minority Report-esque” practice include the LAPD and the Chicago PD.

Once promoted as a means to enhance security, fairness, and efficiency, predictive policing tools are riddled with critical flaws:

- They rely on arrest data that in itself reflects patterns of discriminatory policing rather than actual crime. This perpetuates existing biases and leads to the over-policing of minority communities.

- Limited studies also show minimal benefits. These include slightly reduced incarceration rates or better identification of low-risk individuals. But these gains are often overshadowed by systemic flaws.
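The first flaw can be illustrated with a deliberately simple, deterministic toy model in Python. It assumes two hypothetical districts with identical underlying crime rates but a skewed arrest history, and it allocates patrols in proportion to recorded incidents. All names and numbers are invented for illustration and do not model any real product.

```python
def simulate_patrol_feedback(recorded, true_rate, patrols_per_round, rounds):
    """Allocate patrols in proportion to recorded incidents, then record new ones.

    recorded: dict of district -> incidents recorded so far (the historical data)
    true_rate: dict of district -> incidents observed per patrol (underlying crime)
    """
    recorded = dict(recorded)  # work on a copy so the caller's history is untouched
    for _ in range(rounds):
        total = sum(recorded.values())
        # Patrols follow the historical record, not the underlying crime rate.
        patrols = {d: patrols_per_round * n / total for d, n in recorded.items()}
        for d in recorded:
            recorded[d] += patrols[d] * true_rate[d]
    total = sum(recorded.values())
    return {d: n / total for d, n in recorded.items()}

history = {"district_a": 60, "district_b": 40}        # hypothetical skewed history
same_crime = {"district_a": 0.5, "district_b": 0.5}   # identical underlying rates
shares = simulate_patrol_feedback(history, same_crime, patrols_per_round=100, rounds=20)
print({d: round(s, 3) for d, s in shares.items()})
```

Even after 20 rounds, district_a still accounts for about 60% of recorded incidents, although both districts have the same underlying rate. In this toy model, the data keeps confirming the original skew because officers record incidents only where they are sent.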

Public outcry has forced cities like Chicago and Los Angeles to ban, restrict, or shelve predictive policing programs. Lawmakers and advocates now demand transparency, regulations, and independent audits to safeguard civil liberties.

But with companies like SoundThinking (previously ShotSpotter) acquiring parts of Geolitica (previously PredPol), where is AI surveillance technology heading?

SoundThinking rebranded predictive policing tools like PredPol as “deployment tools,” though WIRED found that their functionality remains similar. Critics argue these tools rely on biased historical data, disproportionately targeting low-income and minority communities.

An interactive courtroom game by MIT Technology Review does an excellent job of highlighting these baked-in algorithmic biases.

Critics also argue that the tools are ineffective. An analysis by The Markup found that PredPol had a success rate of less than 0.5%. In fact, the LAPD dropped PredPol in 2020 due to inefficacy.

Despite claims of innovation, SoundThinking’s quiet expansion raises newfound concerns about the increasing privatization of public safety technology.

The question remains:

Is this form of AI technology helping justice—or just hiding injustice behind a digital mask?

Legal Frameworks and AI Regulatory Gaps

The rapid adoption of AI surveillance technologies has outpaced existing legal frameworks. Here’s what a regulatory gap analysis by Wang et al. (2024) revealed:

- The US lacks a comprehensive federal law for facial recognition technology. This has led to a patchwork of sector-specific and state-level regulations.

- States like Illinois and California have enacted biometric privacy laws. Still, the absence of a uniform federal standard means nationwide protections are inconsistent.

- The European Union’s GDPR addresses data privacy but may not fully capture the complexities of facial recognition technology. This is particularly true for consent and data minimization in public surveillance contexts.

The ethical concerns of AI surveillance technology are only becoming more pervasive. Governments worldwide need comprehensive measures to address them.

Current Global Legal Perspectives on AI Surveillance

As the adoption of AI surveillance technology expands, countries still grapple with regulatory hurdles. Maintaining a delicate balance between innovation, public safety, and civil liberties is no easy feat.

United States

The US lacks a unified federal framework for artificial intelligence governance, resulting in uneven state-level protections. That said, the Office of Management and Budget (OMB) adopted a 2024 AI policy. This government-wide initiative emphasizes transparency, equity, and privacy.

The Blueprint for an AI Bill of Rights also outlines guiding principles. These include the prevention of algorithmic discrimination and data privacy protection. While such measures signal progress, they fall short of comprehensive, enforceable legislation. The regulatory gaps are still significant.

Several federal legislative proposals aim to regulate the use of AI surveillance technologies. While slow-moving, some notable initiatives include:

Proposes making NIST’s AI Risk Management Framework mandatory for government agencies to ensure risk assessments and accountability.

Would require companies to conduct bias impact assessments for automated systems, emphasizing transparency and fairness.

- Executive Order on AI Development

Directs federal agencies to implement guidelines ensuring responsible and rights-protective AI deployment.

These legislative efforts reflect a growing recognition of the need to balance technological innovation with ethical considerations. Will they ultimately succeed? Only time will tell.

European Union

The EU’s Artificial Intelligence Act came into effect on 1 August 2024. It categorizes AI applications by risk, imposing stricter rules on high-risk technologies, including surveillance. While it aims to protect civil liberties, debates persist over balancing innovation with regulation.

Asia

China’s extensive use of AI surveillance technology often lacks transparency—which raises human rights concerns. Meanwhile, Japan and South Korea are crafting ethical AI frameworks, though enforcement and public trust are still developing.

United Kingdom

The UK GDPR and the Data Protection Act 2018 govern the collection and use of personal data. The Investigatory Powers Act 2016 also provides legal oversight for public authority surveillance. In 2024, the UK further committed to ethical AI governance. It signed the Council of Europe’s Framework Convention on Artificial Intelligence and Human Rights.

Legitimate vs. Unlawful Surveillance

State surveillance can be a powerful tool for legitimate purposes. After all, public safety and crime prevention are ongoing and ever-evolving societal issues. But advancements in (largely unregulated) AI technologies have only expanded the reach of surveillance.

This, in turn, has enabled both democratic and autocratic regimes to adopt systems with unprecedented capabilities. Remember China’s Skynet? That’s why 36 governments, including the US, adopted Guiding Principles on Government Use of Surveillance Technologies.

The evolution of these systems blurs the line between protection and intrusion. The US government itself acknowledges as much:

“In some cases, governments use these tools in ways that violate or abuse the right to be free from arbitrary or unlawful interference with one’s privacy. In the worst cases, governments employ such products or services as part of a broad state apparatus of oppression.”

According to the AIGS Index, three principles under international human rights law are essential for lawful surveillance:

- Domestic laws must be precise and accessible.

- Actions must meet the “necessity and proportionality” standard.

- The interests justifying surveillance must be legitimate, focusing on national security.

Despite these high standards and principles, even democracies with strong oversight mechanisms often fail to fully protect individual rights.

Ethical AI Surveillance: Actuate AI’s Approach

At Actuate AI, we redefine ethical AI-enabled video surveillance by focusing on object detection and computer vision rather than invasive biometric tracking. With over 80,000 cameras deployed, our privacy-by-design approach ensures public safety while respecting individual rights.

Our advanced machine learning models, trained on millions of security camera frames, deliver unparalleled accuracy. With real-world feedback, we’re always refining performance to drive our low false alert rates even lower.

This is how we continue to uphold our industry-leading detection standards.

Rather than compromising civil liberties, we continuously innovate with products like fire detection software, for example. Actuate AI proves that cutting-edge technology and privacy-first principles can coexist.

Finding a Balance: How Far Should We Go?

Is AI surveillance technology a natural and much-needed step in our societal evolution? Or is it a dangerous slide into Orwellian control?

As Orwell himself wrote, “[The masses] never revolt merely because they are oppressed.”

In the age of AI and privacy dilemmas, the real question goes beyond acknowledging surveillance. It’s about whether we accept it and under what conditions.

Will we demand accountability, transparency, and ethical AI boundaries? Or will we allow convenience and fear to dictate the trade-offs for our freedoms?

AI surveillance technology is not inherently good or bad—it’s a tool.

Its impact depends on how we choose to wield it.

Ready to reimagine what AI surveillance can do?

Discover the real-world benefits of Actuate’s AI surveillance software and learn how it empowers smarter, ethical-first, and future-ready security solutions.