Real-time AI video analytics enables security professionals to drastically improve their surveillance operations.

However, it is critical to consider an organization’s operational requirements and limitations before deploying analytics.

This guide explains how a “smart” (AI-powered) video surveillance system is architected, its capabilities and limitations, and the trade-offs of processing analytics in the cloud vs. on-premise.

What Is Smart Video Surveillance?

The basics of camera architecture and design strategy

Smart Video Basics

Smart video surveillance involves integrating analytics into an organization’s surveillance camera architecture. Such integrations can improve threat detection and operating efficiency and solve numerous other common pain points.

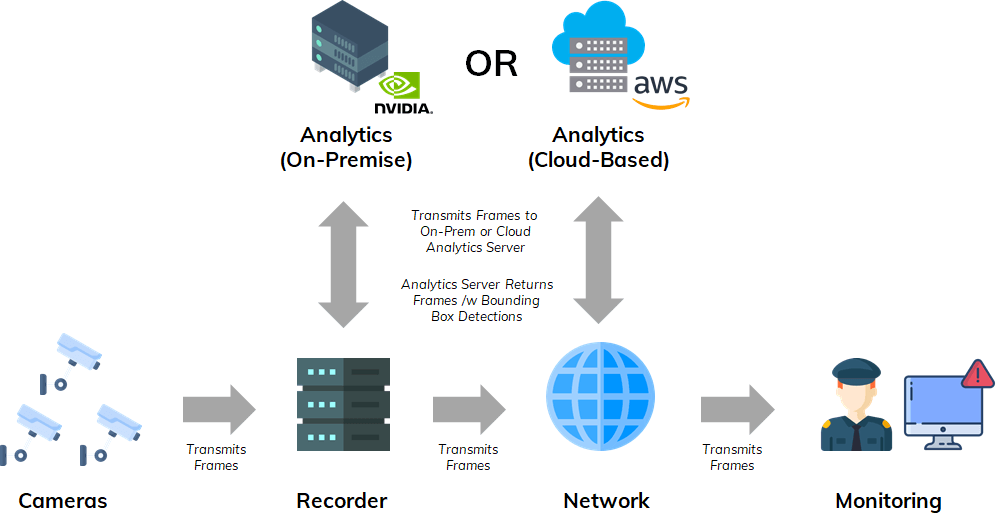

At its most basic level, a modern, well-designed AI video surveillance architecture consists of five main components: cameras, recorders, network, monitoring, and analytics.

What is the Goal of Your Surveillance System?

Deciding which hardware, analytics, and monitoring specifications are necessary when deploying smart video surveillance depends on your organization’s operational requirements. For example, a small grocery store in an Atlanta suburb has very different requirements than a mid-sized urban school district with eight buildings.

When designing a smart video surveillance system, the primary questions to consider are the following:

- Which threats need to be mitigated, and which types of AI video analytics would be most effective at mitigating them?

- What are my resource constraints in hardware, bandwidth, and monitoring, and how do these constraints impact the type, accuracy, and performance of the analytics I can adopt?

Ultimately, the use of video analytics is possible on almost all systems, but the components available will determine overall system performance. An Actuate consultant can provide an analysis of what’s possible after gathering information on your current architecture.

Video Analytics Explained

Which type of analytics is right for your organization?

Operational requirements significantly impact “smart” video surveillance deployments, which is why it is critical to determine which type of video analytics best fits your organization’s needs. Below, we explore the main kinds of video analytics, along with their strengths and weaknesses.

- Video Motion Detection

- Basic Analytics / False Positive Reduction

- Advanced Analytics

- Facial Recognition

The most basic version of camera-based analytics is video motion detection (VMD). Motion detection works by analyzing pixel changes from one frame to the next and triggering an alert whenever the difference exceeds a sensitivity threshold.
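The frame-differencing idea behind VMD can be sketched in a few lines. This is a minimal illustration, assuming frames arrive as 2D lists of 8-bit grayscale values; the function names and the `pixel_delta` and `threshold` parameters (and their defaults) are hypothetical, not settings from any particular camera or VMS.

```python
def changed_pixel_fraction(prev_frame, curr_frame, pixel_delta=25):
    """Fraction of pixels whose grayscale value changed by more than pixel_delta between two frames."""
    changed = total = 0
    for prev_row, curr_row in zip(prev_frame, curr_frame):
        for prev_px, curr_px in zip(prev_row, curr_row):
            total += 1
            if abs(curr_px - prev_px) > pixel_delta:
                changed += 1
    return changed / total if total else 0.0


def motion_detected(prev_frame, curr_frame, threshold=0.02, pixel_delta=25):
    """Raise a motion alert when the changed-pixel fraction exceeds the threshold.

    Lowering `threshold` makes the detector more sensitive (and more prone to false positives).
    """
    return changed_pixel_fraction(prev_frame, curr_frame, pixel_delta) > threshold


still = [[10] * 4 for _ in range(4)]
moved = [row[:] for row in still]
moved[0][0] = 200                      # 1 of 16 pixels changes: fraction 0.0625
print(motion_detected(still, still))   # False
print(motion_detected(still, moved))   # True
```

Note that the detector has no idea *what* changed: a shadow crossing those pixels would trigger it just as a person would, which is the root of the weaknesses listed below.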

Strengths:

- Low cost; does not require additional computing power from an on-premise device or a cloud-based service.

- Can be effective when deployed in the right environment; for example, indoor environments outside of business hours.

- Can be layered in front of AI-based analytics to reduce their bandwidth and compute consumption.

Weaknesses:

- Highly prone to false positives in most deployment environments, since swaying vegetation, animals, and even changing shadows and lighting can all register as motion.

- Can overwhelm a monitoring operation if set up in areas that are prone to false positives.

- Can lead to missed alerts if motion sensitivity is set too low (in an effort to reduce false positives).

Takeaway: In most situations, video motion detection alone is insufficient for an effective smart video surveillance system. However, when used as a pre-processing step for AI-based analytics, VMD can significantly improve cost and bandwidth efficiency by filtering out frames that do not need to be processed.

Instead of analyzing pixel changes between frames, basic AI analytics analyze each frame independently and look for specific objects that a deep learning algorithm can identify. Compared with VMD, basic AI analytics offer an additional layer of nuance, for example: “that is a human” or “that is a vehicle.”
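Once a detector has labeled the objects in a frame, the "that is a human" filtering step is straightforward. The sketch below assumes a hypothetical object detector that returns a class label and confidence score per object; the `Detection` type, the target classes, and the 0.6 confidence cutoff are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # class name produced by a (hypothetical) object detector
    confidence: float  # detector score in [0, 1]


TARGET_CLASSES = {"person", "vehicle"}


def relevant_detections(detections, targets=TARGET_CLASSES, min_confidence=0.6):
    """Keep only detections of target classes that clear the confidence cutoff."""
    return [d for d in detections if d.label in targets and d.confidence >= min_confidence]


def should_alert(detections):
    """Alert only if at least one relevant object is present in the frame."""
    return bool(relevant_detections(detections))


frame_detections = [Detection("squirrel", 0.91), Detection("person", 0.83), Detection("vehicle", 0.41)]
print([d.label for d in relevant_detections(frame_detections)])  # ['person']
```

A confident squirrel detection is ignored while a person triggers an alert, which is exactly the kind of false positive VMD cannot screen out. Each frame is still judged on its own, though, so this filter says nothing about behavior over time.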

Strengths:

- Adds a degree of nuance beyond VMD, which can reduce the number of false positives that security staff must process.

Weaknesses:

- Basic analytics cannot determine the behavioral context of object interactions. For example, basic analytics can identify human beings and ignore squirrels, but it can’t filter any further (e.g., “there is a group of three people loitering in the stairwell” or “a person is carrying an AR-15 in the East Wing of the building”). These capabilities require more advanced analytics models.

Advanced analytics takes the concept of “object detection” a step further with its ability to detect small objects in grainy security camera feeds and draw conclusions based on how objects in surveillance feeds interact.

Examples of advanced analytics may include:

- Gun Detection

- Mask Detection

- Social Distancing Detection

- Loitering Detection

- Crowd Detection

- Slip/Fall Detection

- Fight Detection
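Loitering detection is a good example of drawing a conclusion from how objects behave over time rather than from a single frame. A minimal sketch, assuming a hypothetical upstream tracker has already assigned each person a persistent track ID and flagged whether they are inside the monitored zone; the 60-second limit is an illustrative assumption.

```python
def loitering_tracks(observations, max_dwell_s=60.0):
    """Return IDs of tracks that stayed inside the zone continuously for longer than max_dwell_s.

    observations: iterable of (track_id, timestamp_s, in_zone) tuples sorted by timestamp,
    as produced by a hypothetical person tracker.
    """
    entered_at = {}   # track_id -> timestamp when the current stay in the zone began
    loiterers = set()
    for track_id, t, in_zone in observations:
        if in_zone:
            start = entered_at.setdefault(track_id, t)
            if t - start > max_dwell_s:
                loiterers.add(track_id)
        else:
            entered_at.pop(track_id, None)  # leaving the zone resets the timer
    return loiterers


obs = [
    (1, 0, True), (2, 0, True),
    (1, 30, True), (2, 30, False),  # person 2 walks out of the stairwell
    (1, 75, True), (2, 75, True),   # person 1 has now been there for 75 s
]
print(loitering_tracks(obs))  # {1}
```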

Takeaway: With advanced analytics, security professionals can dramatically improve “incident-driven response,” all the way down to the camera level. For example, a Director of Security for a housing development may want to set up alerts for loitering incidents in the stairwells, gun detection on all indoor cameras covering hallways and lobbies, and human detection on perimeter cameras outside of business hours. In instances such as these, each camera can be configured with the analytics that support its security objective.
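The housing-development setup can be expressed as a simple per-camera rule table. This is a minimal sketch: the camera IDs, rule keys, and the 8:00 to 18:00 business hours are hypothetical, and real analytics platforms use their own configuration formats.

```python
from datetime import time

# Hypothetical per-camera rules for the housing-development scenario.
CAMERA_RULES = {
    "stairwell-1": [{"analytic": "loitering"}],
    "lobby": [{"analytic": "gun"}],
    "hallway-2": [{"analytic": "gun"}],
    "perimeter-north": [{"analytic": "person", "after_hours_only": True}],
}

BUSINESS_HOURS = (time(8, 0), time(18, 0))  # assumed opening and closing times


def is_after_hours(now, hours=BUSINESS_HOURS):
    """True when `now` falls outside the business-hours window."""
    open_t, close_t = hours
    return not (open_t <= now < close_t)


def active_analytics(camera_id, now):
    """Return the analytics that should raise alerts on this camera at this time of day."""
    active = []
    for rule in CAMERA_RULES.get(camera_id, []):
        if rule.get("after_hours_only") and not is_after_hours(now):
            continue
        active.append(rule["analytic"])
    return active


print(active_analytics("perimeter-north", time(14, 0)))  # []
print(active_analytics("perimeter-north", time(22, 0)))  # ['person']
print(active_analytics("lobby", time(14, 0)))            # ['gun']
```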

Facial recognition is a form of advanced analytics that first detects a face, then attempts to match that face to a database of other faces. Facial recognition requires a much higher resolution (pixels-per-foot) than other advanced analytics.

As a result, facial recognition often requires specialized hardware and can be exceptionally expensive: a leading provider of facial recognition technology charges $800-$1,200 per camera per month, excluding the cost of hardware.
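The detect-then-match process described earlier is commonly implemented by comparing face embeddings (fixed-length numeric vectors). The sketch below is one such approach, not any vendor's method: it assumes a separate, hypothetical model has already converted each detected face into an embedding, and it uses cosine similarity with an illustrative 0.8 threshold. The toy 3-element vectors stand in for the much longer embeddings real models produce.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def match_face(probe, gallery, threshold=0.8):
    """Return the best-matching identity in `gallery`, or None if no match clears `threshold`."""
    best_id, best_score = None, -1.0
    for identity, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score > best_score:
            best_id, best_score = identity, score
    return best_id if best_score >= threshold else None


gallery = {"person_a": [1.0, 0.0, 0.0], "person_b": [0.0, 1.0, 0.0]}  # toy embeddings
print(match_face([0.9, 0.1, 0.0], gallery))  # person_a
print(match_face([0.0, 0.0, 1.0], gallery))  # None
```

The threshold is where the privacy and accuracy stakes concentrate: set it too low and strangers are "matched" to people in the database; set it too high and genuine matches are missed.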

Use cases for this type of analytics are limited and may include:

- Automated access control

- Enforcing a list of people that must be denied entry

- Interdiction of repeat shoplifters

Note that the deployment of facial recognition has become increasingly controversial and contested across the country. In September 2020, the City of Portland passed a ban on facial recognition. What’s more, a school district in upstate New York was sued by parents after signing a $2.5 million facial recognition contract, and the district was forced to stop using the technology after Governor Cuomo issued a moratorium.

Takeaway: With concerns about civil liberties and privacy rising, any decision to adopt facial recognition analytics should be deliberated thoroughly.

Capabilities Not Possible With Current Technology

As video analytics experts, we are often asked by clients whether we can develop specific capabilities to meet varying operational requirements. Usually, our answer is, “If the incident is objective, we can build it. If it’s subjective, then we can’t.”

How Cameras Impact Analytics Deployments

Cameras: Analog, IP, and Smart

Until the last decade, deployments included a healthy mix of analog and IP cameras. Today, most deployed cameras are IP cameras. As a result, the debate usually centers not on whether to install IP or analog cameras, but on whether to install traditional IP cameras or opt for hardware-accelerated “smart” cameras. In this section, we discuss how the cameras within your install base impact the type of analytics you’ll need to adopt.

Pixels per Foot

The level of detail with which objects appear in surveillance footage is determined by the camera’s resolution and the object’s distance from the camera. It is measured by a metric called “pixels per foot” (or pixels per meter, if you prefer the metric system).
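Pixels per foot can be estimated from the camera's horizontal resolution, its horizontal field of view (HFOV), and the distance to the target, using the standard geometry where the scene width at distance d is 2 · d · tan(HFOV / 2). This sketch assumes a flat scene perpendicular to the camera and ignores lens distortion; the 90-degree lens and the distances are illustrative.

```python
import math


def pixels_per_foot(horizontal_pixels, hfov_degrees, distance_ft):
    """Horizontal pixels per foot for a flat scene perpendicular to the camera at distance_ft."""
    scene_width_ft = 2 * distance_ft * math.tan(math.radians(hfov_degrees) / 2)
    return horizontal_pixels / scene_width_ft


print(round(pixels_per_foot(1920, 90, 20), 1))  # 1080p, 90-degree lens, 20 ft: 48.0
print(round(pixels_per_foot(1920, 90, 40), 1))  # same camera, twice as far: 24.0
```

Doubling the distance halves the pixels per foot, which is why an object that is easy to classify near the camera can become unrecognizable at the far end of the same scene.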

Analog Cameras

Before surveillance footage can be transmitted over a network, it must be encoded into a digital signal. Analog cameras output an unencoded signal, which is typically either recorded to a local hard disk that must be retrieved manually or transmitted to a digital encoder or a video recorder with a built-in encoder.

IP Cameras

The biggest difference between IP cameras and analog cameras is that IP cameras conduct the digital encoding and compression on the device itself. The vast majority of currently deployed cameras are IP cameras, and both basic analytics and advanced analytics should work well on IP cameras, as long as they are 720p or better.

Smart Cameras

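AI analytics rely on a series of matrix algebra calculations that are incredibly resource-intensive and best performed on a particular type of computer chip known as a graphics processing unit (GPU). Smart cameras build this kind of hardware acceleration into the camera itself, so the analytics can run on the device.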
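To give a sense of scale for this matrix algebra, the sketch below counts the multiply-accumulate (MAC) operations in a single standard convolution layer, the basic building block of most image-recognition models. The layer shape (720p RGB input, 3x3 kernels, 32 filters, stride 1, same padding) is an illustrative assumption, not the architecture of any specific product.

```python
def conv2d_macs(out_height, out_width, in_channels, out_channels, kernel_size):
    """Multiply-accumulate operations for one standard 2D convolution layer on one frame."""
    return out_height * out_width * out_channels * in_channels * kernel_size * kernel_size


# Hypothetical first layer on a 720p RGB frame: 3x3 kernels, 32 filters, stride 1, same padding.
macs = conv2d_macs(720, 1280, 3, 32, 3)
print(f"{macs:,} MACs per frame")  # 796,262,400 MACs per frame
```

That is roughly 0.8 billion operations for one layer of one frame; a full model stacks many such layers and repeats the work for every frame analyzed. Because these operations are independent of one another, they map well onto the massively parallel design of a GPU.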

Video Recorders and Analytics Deployments

Understanding Recorders: DVR, NVR, and VMS

Just like camera deployment, video recorders are another important factor that security professionals must consider when creating their “smart” surveillance architecture. There are three main types of recorders worth considering: digital video recorders (DVRs), network video recorders (NVRs), and video management systems (VMSes).

- Network and Digital Video Recorders (NVR and DVR)

- Video Management Systems (VMS)

NVR systems are similar to DVRs but are deployed with digital (IP) cameras. Instead of connecting to the NVR via coaxial cables, cameras are connected via Ethernet cable or wirelessly. Like DVRs, NVR appliances are designed for smaller deployments, usually up to 32 channels.

Analytics can be deployed with NVRs, but doing so usually requires the analytics provider to build an API integration with the NVR manufacturer to enable streaming of camera feeds to either an on-premise or cloud-based GPU server.

In cases where an integration cannot be built, an analytics provider can pull a secondary stream directly from individual cameras into an analytics server. However, alerts will then have to be delivered separately instead of through the NVR’s user interface.

A VMS is enterprise software typically installed on a local desktop computer or server that enables the customization and management of surveillance camera feeds. A VMS’s functionality is similar to an NVR’s but allows for additional flexibility since it’s not restricted to a fixed appliance.

The Benefits Of Adopting a VMS Over an NVR Appliance:

- There are no upfront hardware costs, as long as you have a desktop computer that can host the VMS or can run it on a virtual machine.

- A VMS has the flexibility to support more channels by adding network switches.

- Because VMS software is growing in popularity relative to NVR appliances, you are more likely to receive continued updates and technical support.

- Analytics providers are more likely to have API integrations with the major VMS brands than with NVR devices. These integrations can reduce bandwidth and compute consumption, and they allow video to be pulled through a single server instead of from dozens, hundreds, or even thousands of individual cameras.

The Potential Cons of Adopting a VMS: A VMS typically requires more technical and networking expertise to install. This may not be a problem for larger organizations, but it could add complexity for smaller ones.

Takeaway: If analytics support is a significant factor in choosing between a VMS and an NVR appliance, we recommend installing a VMS. As VMSes continue to take market share, NVRs are gradually losing ground and will likely follow analog cameras toward near-obsolescence.

Strategies for Minimizing Bandwidth Consumption

Strategies and techniques to consider when integrating analytics.

A common concern for security professionals when evaluating cloud-based analytics installations is bandwidth. They are often worried that deployments will result in a significant slowdown of the company network. What’s more, in cases of metered connections (usually remote sites where a 4G connection provides service), the additional cost can be significant. Fortunately, there are several techniques that analytics providers can leverage to minimize the amount of bandwidth consumed: image compression, motion pre-processing, and limited frame sampling.

- Image Compression

- Motion Pre-Processing

- Limited Frame Sampling

Image compression is an excellent way for analytics solutions to minimize the bandwidth they require. Modern compression standards, such as *H.264 and *H.265, dramatically lower the bitrate needed for video transmission by encoding several predicted frames (P-frames), which store only the changes from preceding frames, for every complete intra-coded frame (I-frame).

*Most cameras / VMSs manufactured within the past decade will support H.264, if not H.265.
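The I-frame/P-frame idea can be illustrated with a deliberately simplified encoder. This toy sketch only stores changed pixel values; real H.264 and H.265 encoders use motion-compensated prediction, transform coding, and entropy coding, so treat it as an illustration of the principle rather than of either standard. Frames are assumed to be flat lists of pixel values, and `gop_size` (how often a full I-frame is inserted) is an illustrative parameter.

```python
def encode(frames, gop_size=30):
    """Toy encoder: every gop_size-th frame is stored whole (I-frame); the rest store only changed pixels (P-frames)."""
    encoded = []
    for idx, frame in enumerate(frames):
        if idx % gop_size == 0:
            encoded.append(("I", list(frame)))
        else:
            prev = frames[idx - 1]
            changes = [(i, v) for i, (p, v) in enumerate(zip(prev, frame)) if p != v]
            encoded.append(("P", changes))
    return encoded


def decode(encoded):
    """Rebuild full frames by applying each P-frame's changes to the previous frame."""
    frames = []
    for kind, payload in encoded:
        if kind == "I":
            frame = list(payload)
        else:
            frame = list(frames[-1])
            for i, v in payload:
                frame[i] = v
        frames.append(frame)
    return frames


frames = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 9],  # one pixel changes
    [0, 0, 0, 0, 0, 0, 9, 9],  # one more pixel changes
]
encoded = encode(frames)
print([(kind, len(payload)) for kind, payload in encoded])  # [('I', 8), ('P', 1), ('P', 1)]
print(decode(encoded) == frames)                            # True
```

Because surveillance scenes are mostly static, P-frames are usually small, which is where most of the bitrate savings come from.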

Most video management systems and certain cameras provide video motion detection (VMD) capabilities, meaning they can trigger an event alarm whenever the camera detects motion. As mentioned in the Video Analytics Explained section, VMD is not a practical standalone solution in most situations due to its high rate of false positives.

Still, cameras (and VMSs) can be configured to send frames to an analytics server only when motion is detected, reducing bandwidth consumption by 80-90% in certain cases.
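Motion-gated forwarding can be sketched as a filter that passes a frame on for AI analysis only when it differs enough from the previous one. This minimal sketch assumes flattened grayscale frames and uses mean absolute pixel difference as the motion score; the function names and the threshold of 5 gray levels are hypothetical, and the 80% figure it prints comes from this contrived input, not from a real deployment.

```python
def mean_abs_diff(prev_frame, curr_frame):
    """Average absolute change per pixel between two equally sized, flattened grayscale frames."""
    return sum(abs(a - b) for a, b in zip(prev_frame, curr_frame)) / len(curr_frame)


def gate_frames(frames, threshold=5.0):
    """Yield (index, frame) only for frames that changed enough to be worth sending for AI analysis."""
    prev = None
    for idx, frame in enumerate(frames):
        if prev is not None and mean_abs_diff(prev, frame) > threshold:
            yield idx, frame
        prev = frame


frames = [[0] * 100 for _ in range(10)]  # ten static frames...
frames[6] = [50] * 100                   # ...except a brief change in frame 6
sent = [idx for idx, _ in gate_frames(frames)]
print(sent)                              # [6, 7]: entering and leaving the changed state
print(1 - len(sent) / len(frames))       # 0.8 of frames are never sent to the server
```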

Limited frame sampling is another technique that analytics providers often use to minimize bandwidth consumption. Top-performing analytics models don’t need 10 or 20 frames per second (fps) to deliver accurate results; for most analytics solutions, sampling at 1 fps is enough to trigger accurate detections.

However, some forms of advanced analytics, such as mask and gun detection, will require up to 3 fps to minimize the probability of a missed detection on a small object that may only appear briefly in a surveillance camera feed.
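Choosing which frames to keep when sampling a stream down to 1 or 3 fps is simple index arithmetic. This sketch assumes integer frame rates and a hypothetical `sample_indices` helper that keeps the first frame in each slot of the target rate; it is one reasonable way to do it, not a specific provider's implementation.

```python
def sample_indices(num_frames, source_fps, target_fps):
    """Indices of frames to keep when downsampling a source_fps stream to target_fps (integer rates)."""
    if target_fps >= source_fps:
        return list(range(num_frames))
    return [
        i for i in range(num_frames)
        if i == 0 or (i * target_fps) // source_fps != ((i - 1) * target_fps) // source_fps
    ]


print(sample_indices(60, 20, 1))  # [0, 20, 40]: 3 s of 20 fps video at 1 fps
print(sample_indices(20, 20, 3))  # [0, 7, 14]: 1 s of 20 fps video at 3 fps
```

On a 20 fps camera, 1 fps sampling sends only 1 frame in 20 (5%) to the analytics server, and 3 fps sends 3 in 20 (15%), which is the trade-off between bandwidth and catching briefly visible objects described above.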

An analytics provider that combines image compression, motion pre-processing, and limited frame sampling can deliver high-performing analytics at an expected bitrate of roughly 20-100 kbps per camera, without impacting detection accuracy.
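For sites on metered connections, the 20-100 kbps range translates into a monthly data volume per camera. This worked calculation assumes a stream running continuously for a 30-day month and uses decimal gigabytes; motion gating would lower the real figure.

```python
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # 30-day month: 2,592,000 seconds


def monthly_gigabytes(bitrate_kbps, seconds=SECONDS_PER_MONTH):
    """Decimal gigabytes transferred by a stream held at `bitrate_kbps` for `seconds`."""
    total_bits = bitrate_kbps * 1_000 * seconds
    return total_bits / 8 / 1e9


for kbps in (20, 100):
    print(f"{kbps} kbps -> {monthly_gigabytes(kbps):.2f} GB per camera per month")
# 20 kbps -> 6.48 GB per camera per month
# 100 kbps -> 32.40 GB per camera per month
```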

On-Premise vs. Cloud Deployments

How to determine which deployment is right for you.

When selecting an analytics provider, one of the most critical decisions is deciding whether to host on-premise (often known as “the edge” or “edge computing”) or in the cloud.

An on-premise or edge deployment means that the computers that process the analytics are on-site, whether in the form of “smart cameras” or a GPU server. Cloud deployment means video feeds are sent to servers run by a cloud services provider, such as Amazon, Microsoft, Google, or IBM.

On-Premise Strengths

- Lower monthly recurring cost, since computing costs are paid for with upfront investment.

- Camera networks can be kept entirely on the LAN, reducing potential cybersecurity risks.

On-Premise Weaknesses

- High upfront costs for hardware (whether it’s GPU servers or smart cameras)

- Cost-inefficient, since the hardware purchased up front will often sit idle as much as 70-80% of the time.

- More difficult to scale, since scaling often involves additional hardware installations. Patches and software improvements to the AI algorithms are also more difficult to implement.

- Some smart camera brands (e.g., Verkada) are completely incompatible with legacy systems and lock you into their service for the life of the hardware.

Cloud Strengths

- Works on legacy camera infrastructure—no additional hardware costs required.

- Maximizes cost-efficiencies, as cloud service providers can auto-scale pooled resources according to consumption, allowing analytics providers to pass the cost savings onto the customer.

- Easy to scale: because no hardware installation is required, a deployment of thousands of cameras can take just a couple of weeks instead of months.

- Patches and model improvements can be rolled out immediately and regularly from a central location, ensuring that the deployed AI models are always the best available technology.

Cloud Weaknesses

- While a VPN connection is highly secure, it will never be as secure as an on-premise deployment kept completely off the internet.

- Certain sites with significant bandwidth limitations (e.g., retail outlets on T1 connections) will not be able to support more than a handful of cameras.

- Latency is rarely more than 1-2 seconds, but alerts will not arrive as quickly as with on-premise deployments.
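A rough capacity check shows why a T1 connection limits camera count. This sketch assumes each analytics stream runs at the upper-end 100 kbps figure and that only half of the T1's 1.544 Mbps can be spent on analytics, leaving the rest for other business traffic. Both figures are assumptions, and the result scales directly with them.

```python
T1_KBPS = 1544  # a T1 line carries 1.544 Mbps


def max_cameras(link_kbps, per_camera_kbps, usable_fraction=0.5):
    """Whole cameras that fit in the share of the link set aside for analytics streams."""
    return int(link_kbps * usable_fraction // per_camera_kbps)


print(max_cameras(T1_KBPS, 100))  # 7
```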

Takeaway: Ultimately, compared with on-premise deployments, cloud-based analytics deployments are much more cost-effective, easier to update, and more scalable. Unless your organization is willing to devote significant resources to a camera infrastructure overhaul, cloud-based deployment is the better solution in most cases.

Key Takeaways

Contrary to popular perception, it is entirely possible to build a high-functioning AI video surveillance architecture that satisfies most organizations’ operational requirements, and at a reasonable cost.

The key to a successful deployment of AI video surveillance is proper preparation. By taking into account your organization’s needs, limitations, and current architecture, you can ensure a successful analytics integration.
